Why DeepSeek's R1 is important

DeepSeek R1 is an industry-shattering model because of its low cost and the fact that it's open source, allowing everyday people to run enterprise-level LLMs at home, for free.

Right now, the world's attention is on DeepSeek and its latest reasoning model, R1. Why?

R1 is an open-source large language model (LLM) that "thinks" before returning its final answer. This type of model is extremely good at complex tasks such as coding and maths. It breaks down the prompt and its likely requirements before answering, which makes responses more accurate and more relevant to the prompt, two areas where LLMs commonly fall short.

What makes R1 so special?

It's open source

R1 uses the MIT License, which allows anyone to use, modify, and distribute the software for any purpose, INCLUDING COMMERCIAL use, as long as the original copyright and license notice is kept. That means literally anyone can download it, run it, edit it, and do whatever they want with it without legal repercussions. This can already be seen in community-made uncensored variants that have removed the content policy guidelines, allowing any content to be produced.

It's so damn cheap

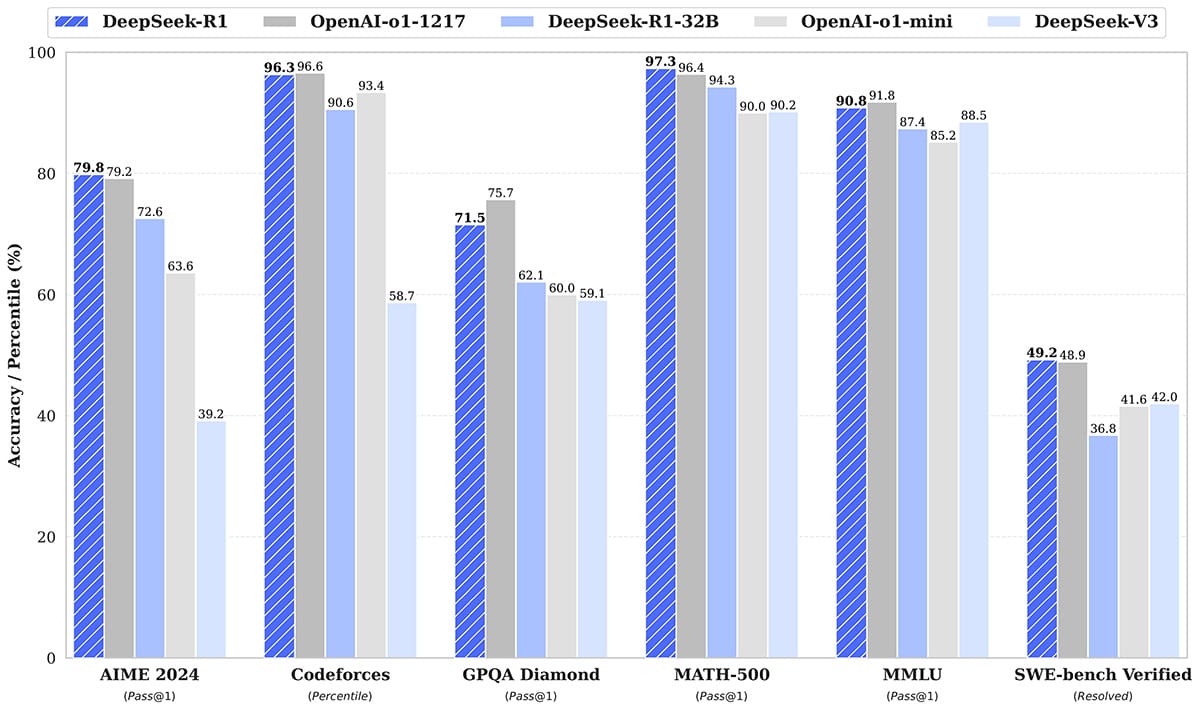

R1 is about 30x cheaper than OpenAI's o1 model in terms of per-token cost. Because R1 uses more tokens while it "thinks", the cost per task works out closer to 4x cheaper, which is still INSANE. On top of that, R1's performance is comparable to o1 (see image 1), and it even BEATS o1 in select categories. All this while DeepSeek reportedly spent only around 5.6 million dollars on training (the figure DeepSeek published for the final training run of DeepSeek-V3, the base model R1 is built on). For contrast, according to various reports, OpenAI spent 50 to 200 million dollars to train GPT-4, and that's not even o1.
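The gap between "30x cheaper per token" and "about 4x cheaper overall" is simple arithmetic: the cost of a task is tokens used × price per token, so the per-token saving gets divided by however many extra tokens the model spends thinking. Here is a minimal Python sketch of that calculation. The function names are just for illustration, and the 7.5x token figure is not a measured number; it is only what the 30x and 4x figures above imply.

```python
def effective_savings(price_ratio: float, token_ratio: float) -> float:
    """How many times cheaper a whole task is.

    price_ratio: how many times cheaper each token is (30 means "30x cheaper").
    token_ratio: how many times more tokens the cheaper model uses for the same task.
    """
    if price_ratio <= 0 or token_ratio <= 0:
        raise ValueError("ratios must be positive")
    # cost = tokens * price_per_token, so the per-token saving
    # is divided by the extra tokens spent "thinking"
    return price_ratio / token_ratio


def implied_token_ratio(price_ratio: float, observed_savings: float) -> float:
    """Extra token usage needed to turn a per-token saving into an observed overall saving."""
    if price_ratio <= 0 or observed_savings <= 0:
        raise ValueError("ratios must be positive")
    return price_ratio / observed_savings


if __name__ == "__main__":
    # 30x cheaper per token but ~4x cheaper overall implies ~7.5x as many tokens
    print(implied_token_ratio(30, 4))   # 7.5
    print(effective_savings(30, 7.5))   # 4.0
```

In other words, if R1 used the same number of tokens as o1 you would get the full 30x saving; every extra multiple of tokens eats into it.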

Everyday people can run it

Now that a cheap, open-source model exists, everyday people are running it, and big AI companies are petrified. OpenAI, Anthropic, Meta and other major LLM providers rely on everyday people being unable to afford the hardware required to run LLMs themselves.

But now you can run a Llama 3 model fine-tuned with DeepSeek R1 (not DeepSeek R1 itself, but a version of Llama 3 trained on data generated by R1) on about 600 USD of hardware: a base-model M4 Mac mini. THAT is why Nvidia's stock dropped roughly 17% in a single day at the time of writing, wiping close to 600 billion dollars off its market cap.
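Why does a small Llama-based model fit on a 600 USD machine? A rough rule of thumb is that model weights take (parameters × bits per weight ÷ 8) bytes. The Python sketch below applies that rule. It assumes an 8-billion-parameter distilled model, 4-bit quantized weights, the 16 GB of unified memory in the base M4 Mac mini, and a made-up 1.2x overhead factor for the context cache and runtime; the function names are hypothetical, and real memory use varies by runtime and context length (the operating system also needs some of that 16 GB).

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    # each weight takes bits_per_weight / 8 bytes; billions of params -> GB directly
    return params_billion * bits_per_weight / 8


def fits_in_memory(params_billion: float, bits_per_weight: float,
                   available_gb: float, overhead: float = 1.2) -> bool:
    """Rough check: weights plus an assumed overhead factor must fit in available memory."""
    return weight_memory_gb(params_billion, bits_per_weight) * overhead <= available_gb


if __name__ == "__main__":
    print(weight_memory_gb(8, 4))        # 4.0 GB for an 8B model at 4-bit
    print(fits_in_memory(8, 4, 16))      # True: fits on a 16 GB machine
    print(fits_in_memory(8, 16, 16))     # False: the same model unquantized (16-bit) does not
    print(fits_in_memory(70, 4, 16))     # False: a 70B model is far too big
```

This is why quantized distilled models, rather than the full R1, are what people typically run on consumer hardware.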

What this means for you

DeepSeek has shattered the industry by demonstrating exceptional price-to-performance for both model training and usage. We will see a rise in easy local LLM setup and usage, maybe to the point where people stop paying for a ChatGPT subscription and instead run their own model at home, possibly even one shared by the whole family. I anticipate more hardware dedicated to hosting LLMs being released soon, along with further progress in running models locally on mobile devices. That would not only let you use a model without an internet connection, but also allow truly private communication with AI models.

Final words

We live in exciting times. I'm thrilled with the release of R1; I spent the better part of a day playing with it and its uncensored variants. I firmly believe we are entering a new age where ChatGPT and related services become less relevant, replaced by people running their own models for free. If this happens, many people's privacy worries about AI will shrink to nothing, because you would have total control. No one wants OpenAI snooping on their conversations, using their data to train LLMs, or worse yet, selling it.

Thanks for reading! Feel free to click the subscribe button to sign up for my newsletter. You'll receive an email whenever I post new content, so you never miss a thing. Have an amazing rest of your day!